Using Noir with LM Studio

Learn how to integrate Noir with LM Studio to run language models locally for private code analysis. This guide shows how to start the LM Studio local server and connect Noir to it.

Setup

-

Install: Download from lmstudio.ai

-

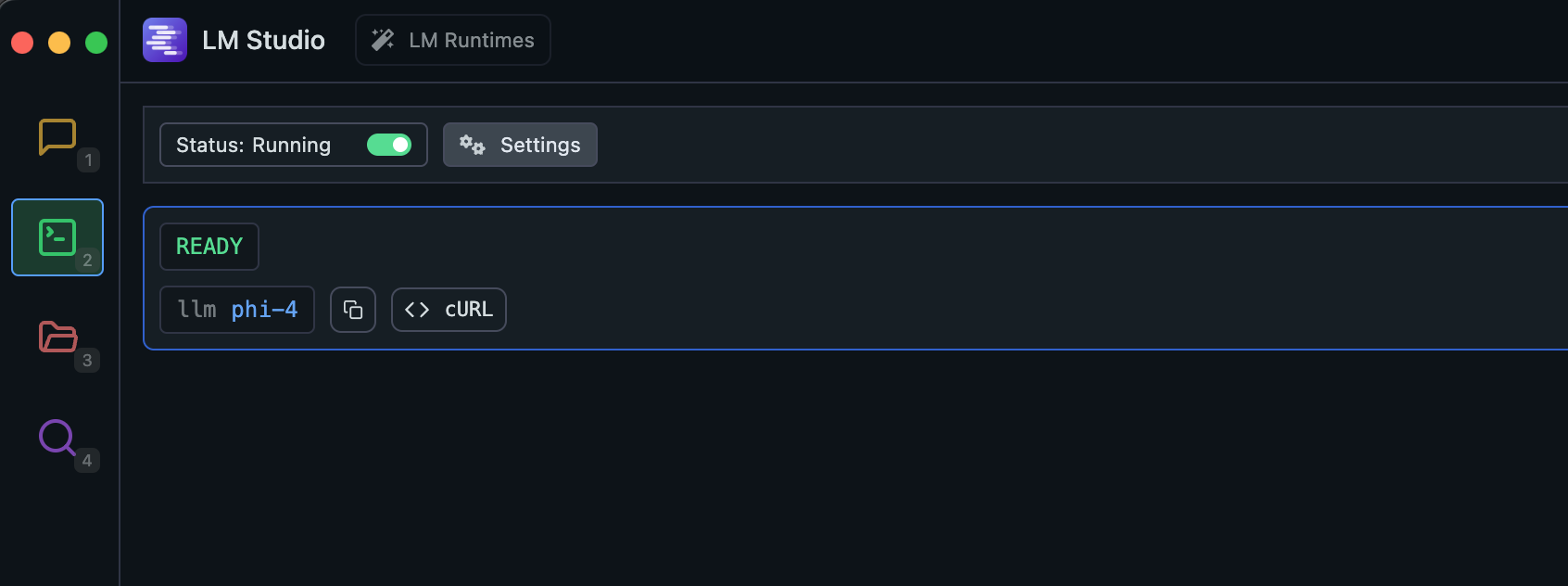

Start Server: Open LM Studio, select a model, navigate to "Local Server" tab, and click "Start Server"
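To confirm the server is running and to look up model names, you can query LM Studio's OpenAI-compatible `/v1/models` endpoint. The `id` values it returns are the identifiers LM Studio accepts in API requests, which is typically the name to use for Noir's `--ai-model`. This is a minimal sketch that assumes the server listens on LM Studio's default address, `http://localhost:1234`. The `LMSTUDIO_URL` variable and the `extract_model_ids` and `list_lmstudio_models` functions are hypothetical helpers, not part of Noir or LM Studio.

```shell
#!/usr/bin/env bash
# Hypothetical helper: assumes LM Studio's default server address.
# Override LMSTUDIO_URL if you changed the port in the "Local Server" tab.
LMSTUDIO_URL="${LMSTUDIO_URL:-http://localhost:1234}"

# Read a /v1/models JSON body on stdin and print each "id" value, one per line.
# Uses grep/sed only, so jq is not required.
extract_model_ids() {
  grep -o '"id"[[:space:]]*:[[:space:]]*"[^"]*"' | sed 's/.*"\([^"]*\)"$/\1/'
}

# Query the running server. curl -f makes HTTP errors return non-zero,
# and -sS hides the progress bar but still prints connection errors.
list_lmstudio_models() {
  curl -fsS "${LMSTUDIO_URL}/v1/models" | extract_model_ids
}
```

Running `list_lmstudio_models` after clicking "Start Server" should print one identifier per model. A connection error usually means the server is not started or is listening on a different port.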

Usage

Run Noir with LM Studio:

```bash
noir -b ./spec/functional_test/fixtures/hahwul \
  --ai-provider=lmstudio \
  --ai-model <MODEL_NAME>
```

Replace `<MODEL_NAME>` with the name of the model loaded in LM Studio.
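If you run this often against different projects or models, a small shell function can wrap the same command and fail early when an argument is missing, instead of calling Noir with an empty `--ai-model`. This is a sketch only. `run_noir_lmstudio` is a hypothetical name, and the function uses only the `-b`, `--ai-provider` and `--ai-model` flags shown above.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper (not part of Noir): runs Noir with the LM Studio
# provider for a given source path and model name.
run_noir_lmstudio() {
  local base_path="$1"
  local model="$2"

  # Both arguments are required; return a usage error (exit status 2)
  # rather than invoking noir with missing values.
  if [ -z "$base_path" ] || [ -z "$model" ]; then
    echo "usage: run_noir_lmstudio <base_path> <model_name>" >&2
    return 2
  fi

  # Quoting keeps paths and model names containing spaces or slashes intact.
  noir -b "$base_path" \
    --ai-provider=lmstudio \
    --ai-model "$model"
}
```

Usage: `run_noir_lmstudio ./spec/functional_test/fixtures/hahwul <MODEL_NAME>`.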